To improve your website's optimisation, you need to make sure search engine bots can crawl your most important pages. The robots.txt file helps with that: it guides most search engine bots towards the pages you want indexed and away from the ones you don't.


What Is a Blog Robots.txt File and Why Do You Need It?

Robots.txt is a file containing instructions for search engine robots, telling them whether to crawl or avoid certain web pages, uploaded files, or URL parameters. Simply put, the robots.txt file acts like a guide for crawlers, saying, "You can view this section of the website or blog, but not that section."
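As a rough illustration of how a crawler reads these instructions, the sketch below uses Python's standard `urllib.robotparser` module. The rules, the example.com URLs, and the `is_allowed` helper are assumptions made up for this example; real crawlers such as Googlebot use their own parsers and may interpret some rules differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules, written in the style of a Blogger robots.txt.
RULES = """\
User-agent: *
Disallow: /search
Allow: /
"""


def is_allowed(rules_text: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(rules_text.splitlines())
    return parser.can_fetch(user_agent, url)


# A normal post URL versus a search/label URL (both hypothetical).
print(is_allowed(RULES, "Googlebot", "https://www.example.com/2024/01/my-post.html"))
print(is_allowed(RULES, "Googlebot", "https://www.example.com/search/label/SEO"))
```

The first URL is permitted by `Allow: /`, while the second falls under `Disallow: /search`, which is the "this section, but not that section" behaviour described above.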

To understand why it matters for website optimisation, consider what happens when someone creates a new website: search engines send their crawlers to discover and collect the information needed to index each page. Once the crawlers find information such as keywords and fresh content, they add the page to the search index.


Without a robots.txt file, bots may index pages not meant for public view or even fail to visit your most important pages. Moreover, modern websites contain more than just web pages. For example, if you're using WordPress, you’ll likely install plugins. These plugins create directories that might appear in search results but are irrelevant to your website's content.

Additionally, without a robots.txt file, excessive bot activity can slow down your website's performance.

How Does Robots.txt Work?

When you create a WordPress website, WordPress automatically generates a virtual robots.txt file that is served from your site's root. To view it, simply add /robots.txt after your domain name.

For example:

https://yourwebsite.com/robots.txt
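If you want to work out the robots.txt address from any page on a site, a small helper like the one below can do it. This is only a sketch: `robots_url` is a hypothetical name, and the page URL is a made-up example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Build the robots.txt URL for the site that page_url belongs to."""
    parts = urlsplit(page_url)
    # Keep the scheme and domain, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://yourwebsite.com/2024/01/my-post.html?m=1"))
# prints https://yourwebsite.com/robots.txt
```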

Blogger Custom Robots.txt for Better SEO

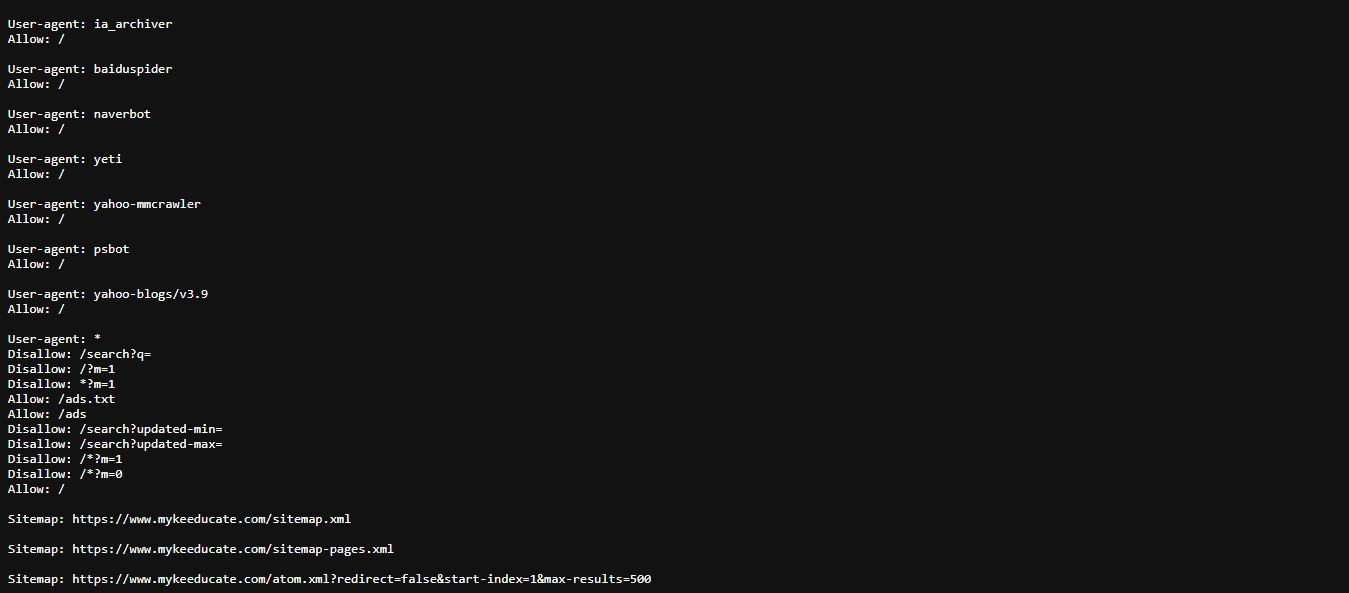

Below are sample robots.txt files for Blogger. Replace https://www.example.com with your own blog or website domain, so that the sitemap URL becomes, for example, https://mykeeducate.com/sitemap.xml

The robots.txt below points search engine bots to a sitemap covering only the first 25 posts.

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Disallow: /*?updated-max
Allow: /

Sitemap: https://www.example.com/sitemap.xml

The robots.txt below points search engine bots to the first 25 posts and also to your static pages.

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Disallow: /*?updated-max
Allow: /

Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/sitemap-pages.xml

The robots.txt below points search engine bots to the first 500 posts on your blog or website, along with your static pages.

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Disallow: /*?updated-max
Allow: /

Sitemap: https://www.example.com/atom.xml?redirect=false&start-index=1&max-results=500
Sitemap: https://www.example.com/sitemap-pages.xml

Note: Remember to replace example.com with your own domain. We recommend the third robots.txt, since its sitemap covers up to 500 posts as well as your pages, which suits most blogs and websites.
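To avoid editing the domain by hand, the third sample can be generated with a few lines of Python. This is a minimal sketch, not an official Blogger tool: `build_blogger_robots` and its parameters are hypothetical names, and the `updated-max` rule is written as `/*?updated-max` on the assumption that crawlers expect a pattern to start with `/` or `*`.

```python
def build_blogger_robots(domain: str, max_results: int = 500) -> str:
    """Return robots.txt text in the style of the third sample above."""
    base = f"https://{domain}"
    lines = [
        # Let the AdSense crawler read every page.
        "User-agent: Mediapartners-Google",
        "Disallow:",
        "",
        # Rules for all other crawlers.
        "User-agent: *",
        "Disallow: /search",
        "Disallow: /*?updated-max",  # assumption: leading /* added
        "Allow: /",
        "",
        # Post feed used as a sitemap, plus the static pages sitemap.
        f"Sitemap: {base}/atom.xml?redirect=false&start-index=1&max-results={max_results}",
        f"Sitemap: {base}/sitemap-pages.xml",
    ]
    return "\n".join(lines) + "\n"


print(build_blogger_robots("www.example.com"))
```

Passing your own domain instead of `www.example.com` gives text you can paste into Blogger's custom robots.txt setting.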

Testing Your Robots.txt File

Every time you update your robots.txt file, test it with a tool such as the robots.txt report in Google Search Console, which replaced the older Robots.txt Tester. This helps you catch mistakes that could harm your site's SEO.
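Before uploading, you can also run a quick local check for common formatting slips. The sketch below is an assumption-laden illustration rather than a full validator: `lint_robots` is a hypothetical helper that only looks for Allow/Disallow values that do not start with `/` or `*`, and Sitemap lines without a full URL on the same line.

```python
def lint_robots(text: str) -> list[str]:
    """Return human-readable warnings for a robots.txt file's text."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        # Drop comments and surrounding whitespace.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field in ("allow", "disallow"):
            # An empty Disallow is valid and means "nothing is blocked".
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path should start with '/' or '*'")
        elif field == "sitemap":
            if not value.startswith(("http://", "https://")):
                problems.append(f"line {number}: Sitemap needs a full URL on the same line")
    return problems


# Hypothetical file with two slips: a bare "?updated-max" and a split Sitemap line.
SAMPLE = """\
User-agent: *
Disallow: /search
Disallow: ?updated-max
Allow: /
Sitemap:
https://www.example.com/sitemap.xml
"""

for warning in lint_robots(SAMPLE):
    print(warning)
```

A check like this complements Search Console rather than replacing it, since only the search engine can tell you how it actually interpreted the file.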


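By now, you should understand what the robots.txt file is, how it works, and how to create one to improve your site's SEO. A well-crafted robots.txt file helps Google crawl and index your content efficiently and keeps crawlers out of directories you don't want them to visit. Keep in mind that the file is publicly readable, so it guides well-behaved bots rather than securing sensitive content.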

If you have questions, feel free to leave them in the comments below. Don’t forget to like this post and subscribe for more tips on SEO and website optimisation. Good luck with your online journey!
